Have You Accepted AI Into Your Life?

“Thou shalt not make a machine in the likeness of a human mind.”

Frank Herbert, Dune

If you happen to know me, you know I’m a very late adopter of AI and LLMs. While my friends were playing around with DALL-E, generating coloring pages and bedtime stories for their kids, vibe-coding and generally transferring more and more daily Googling tasks to GPT, I was sitting on my proverbial porch and shaking my fists at this blasphemy. As a child raised on Frank Herbert’s Dune, I was wary of thinking machines and what they can entail, and preferred to go the mentat way of improving myself and my abilities.

My resolve could only hold so long. When my job search kept going nowhere, and my workload of studying, applying, homemaking and parenting (not to mention the occasional hobby) was starting to take a mental toll, I bit the bullet and bought a Claude subscription. A few months later, I’m still treading carefully, although I have added GitHub Copilot to my toolkit. I find Copilot quite useful as a reminder of syntax I tend to forget, or as a way to find the exact library to do that thing I need to do (things I used to google), while Claude is a good job search buddy, generating CVs and cover letters and summarizing company values and other content I need to grasp quickly. I’ve found justifications to lull my inner objections: it’s OK to not dive deep into every company I’m trying to apply to, now that there are over 50 of them and assuming most of my applications will be read (and rejected) by another AI; it’s OK to generate bash scripts as long as I know how they work. I still write most of my own code and all of my own content, and since I no longer paint, I prefer stock photos to AI-generated images in my blog, mostly because AI “art” gives me the creeps.

So why am I telling you all this? Because yesterday I sat at the Baysec Symposium, and as I was listening to the panel discussing AI as a threat, a tool and, mostly, an inevitability, I was also trying to solve the Pacific Hackers CTF. And let me tell you, there were AI shenanigans to be had.

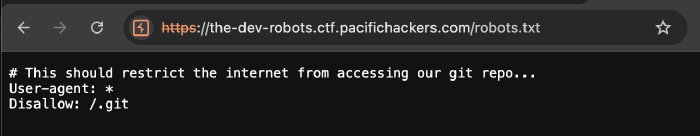

The CTF had several challenges of various types. One such challenge, aptly named “The Dev Robots”, required hacking into a website (no surprises here). Even without the hints hidden in the name or the “about” page, checking the robots.txt file is part of the initial recon checklist. It so happened that on this site, the file included (or rather excluded) the .git directory:

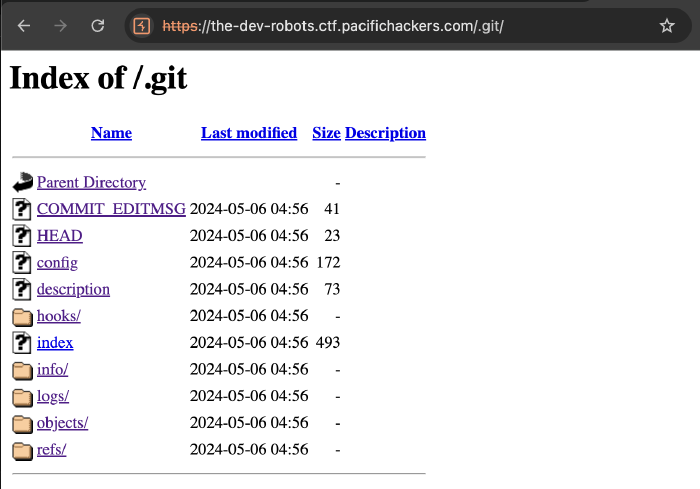
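For the recon step itself, here is a minimal Python sketch of pulling the Disallow entries out of a robots.txt file so they can be visited by hand. The sample file contents are hypothetical, modeled only on the description above, and the function name `disallowed_paths` is my own:

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """Return every path named in a Disallow rule, in file order."""
    paths = []
    for raw_line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        # An empty Disallow value means "nothing is disallowed", so skip it.
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


# Hypothetical contents, not the actual file from the CTF.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /.git/
"""

if __name__ == "__main__":
    for path in disallowed_paths(SAMPLE_ROBOTS_TXT):
        print(path)
```

Anything a site owner asks crawlers to skip is, by definition, a path they know exists, which is why the file earns its place on the checklist.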

Since I’m not an indexing algorithm, I saw no reason not to visit this directory, which was exposed and conveniently browsable:

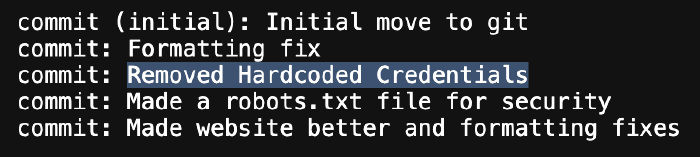

The config file contained the developer’s email, while the logs/HEAD listed some commits along with their messages, including one that was especially useful:

In case you’re not familiar with the way git works, first of all, drop everything and go do this awesome git CTF.

…

Now that you’re back, recall that git stores the repo history as a chain of commits. Each commit is an object, filed under the commit’s hash, that records a snapshot of the project at that point along with a pointer to its parent commit. Starting from the initial commit, which has no parent, every subsequent commit builds on the previous one, and the difference between the two (things added or removed) is what you see in a diff. Thus, all of history is saved in the repo, every point in it being accessible by checking out the relevant commit. Let’s look closer at the log file:

Each row begins with two hashes (shortened here for space considerations). The first hash is the state of the repo before the commit, and the second is the state after. You can really see how each commit builds on the last one, since every post-commit hash in a given row serves as the pre-commit hash in the row below it. We can deduce that the commit at the pre-commit hash of the “Removed Hardcoded Credentials” row should still contain these credentials, and we might want to take a look at it. Note that git stores each object under .git/objects/##/#####, the ## directory being the first two characters of the object’s hash and the file name being the rest of it.
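To make the log format concrete, here is a minimal Python sketch that splits a logs/HEAD row into its two hashes and its message, finds the pre-commit hash for a given message, and builds the matching path under .git/objects. The hashes, committer and timestamps in the sample are made up for illustration, and the helper names are my own rather than anything git provides:

```python
from typing import NamedTuple


class ReflogEntry(NamedTuple):
    old_hash: str  # state before the commit
    new_hash: str  # state after the commit
    message: str


def parse_reflog_line(line: str) -> ReflogEntry:
    # Hashes and committer info come before the tab, the message after it.
    meta, _, message = line.rstrip("\n").partition("\t")
    old_hash, new_hash = meta.split(" ", 2)[:2]
    return ReflogEntry(old_hash, new_hash, message)


def pre_commit_hash(lines: list[str], needle: str) -> str:
    """Return the 'before' hash of the first row whose message contains needle."""
    for line in lines:
        entry = parse_reflog_line(line)
        if needle in entry.message:
            return entry.old_hash
    raise LookupError(f"no log row mentions {needle!r}")


def loose_object_path(object_hash: str) -> str:
    # First two hex characters name the directory, the rest name the file.
    return f".git/objects/{object_hash[:2]}/{object_hash[2:]}"


# Made-up sample rows; real hashes are 40 hex characters of actual SHA-1 output.
ZERO, FIRST, SECOND = "0" * 40, "a" * 40, "b" * 40
WHO = "Dev <dev@example.com> 1700000000 +0000"
SAMPLE_LOG = [
    f"{ZERO} {FIRST} {WHO}\tcommit (initial): Initial commit",
    f"{FIRST} {SECOND} {WHO}\tcommit: Removed Hardcoded Credentials",
]

if __name__ == "__main__":
    target = pre_commit_hash(SAMPLE_LOG, "Removed Hardcoded Credentials")
    print(loose_object_path(target))
```

The all-zero hash in the first row is how git marks "nothing came before this", which is why the chain only starts linking up from the second row onward.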

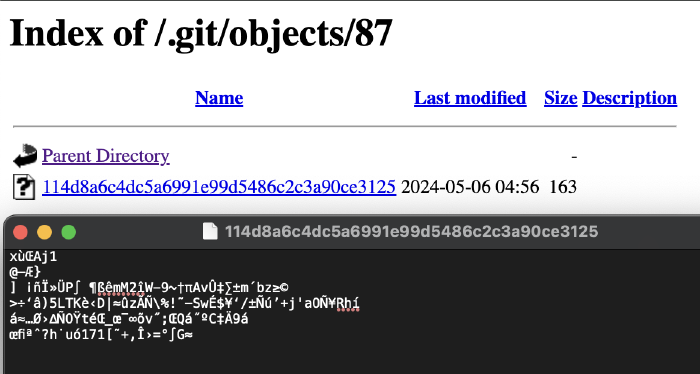

Why would it be readable? It’s not really supposed to be accessed by anything other than git.

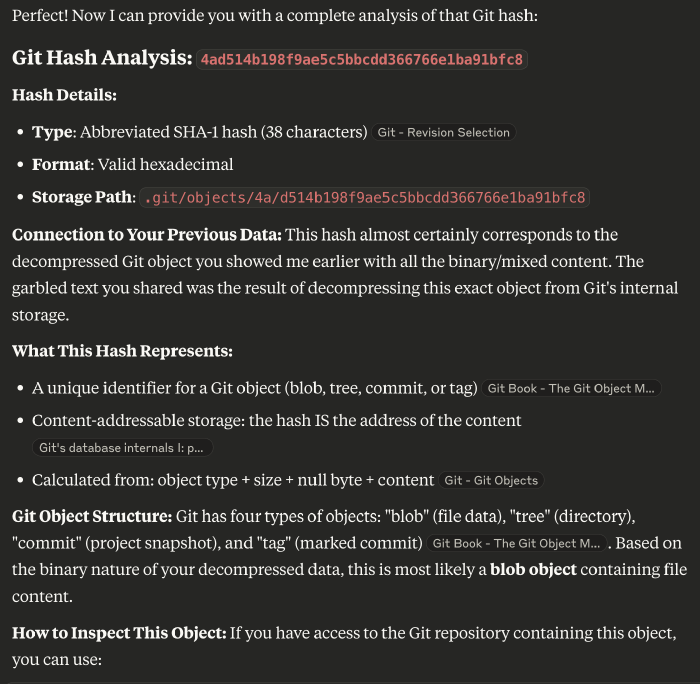
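For the record, the bytes are not encrypted: a loose git object is a zlib-compressed header of the form "type size", a NUL byte, and then the content. Here is a minimal Python sketch of decoding one, assuming a SHA-1 repository and a loose (not packed) object. To stay self-contained it builds its own sample object in memory instead of reading one from the CTF site, and the function names are mine:

```python
import hashlib
import zlib


def encode_loose_object(obj_type: str, body: bytes) -> bytes:
    """Build the on-disk bytes of a loose object (used here only for the demo)."""
    header = f"{obj_type} {len(body)}".encode("ascii") + b"\x00"
    return zlib.compress(header + body)


def decode_loose_object(raw: bytes) -> tuple[str, bytes]:
    """Decompress a loose object and split it into (type, content)."""
    data = zlib.decompress(raw)
    header, _, body = data.partition(b"\x00")
    obj_type, size = header.decode("ascii").split(" ")
    if int(size) != len(body):
        raise ValueError("size in header does not match content length")
    return obj_type, body


def object_hash(obj_type: str, body: bytes) -> str:
    """Hash of header plus content, which is also the object's name on disk."""
    header = f"{obj_type} {len(body)}".encode("ascii") + b"\x00"
    return hashlib.sha1(header + body).hexdigest()


if __name__ == "__main__":
    # Stand-in content; a real object would be read with open(path, "rb").
    raw = encode_loose_object("blob", b"hello\n")
    obj_type, body = decode_loose_object(raw)
    print(obj_type, body, object_hash(obj_type, body))
```

Decoding a commit object this way yields plain text naming a tree and a parent, so getting from a commit hash to a file's contents means following tree objects down to the blob, which is exactly the bookkeeping git checkout does for you.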

Now, I know there are legitimate ways of accessing these files. I also assume there are tools that know how to do just that. But after all this AI talk, about how it’s going to replace me and leave me out of a job, I was curious. So I asked Claude to analyze the contents, along with the hash:

Thank you for the information I already found on the official Git website! Can you make it readable though?

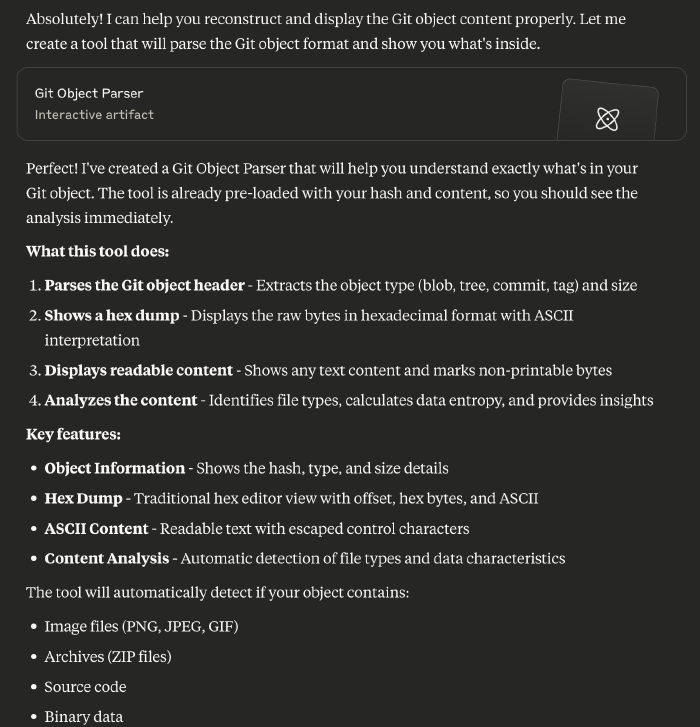

Claude was so cheery and enthusiastic, I was starting to get excited! The tool contained over 300 lines of code, with comments and documentation and a convenient UI. But... did it work?

Both the hex dump and the ASCII content were garbage. After a couple of attempts, I suggested passing it the log file, along with the relevant commits and their hashes. Claude was optimistic, promising me enhancements and features, and even exclaimed “Perfect!” once it was ready.

I couldn’t help but laugh. Maybe I fed it garbage, maybe I’m not good at prompt engineering, but it was so gosh darn confident!

At this point I gave up on Claude, and did what I was too lazy to do from the start. I downloaded the folder content using wget, checked out the relevant commit, then looked for passwords:

root@kali:~$ wget https://the-dev-robots.ctf.pacifichackers.com/.git/ -r -e robots=off

--2025-06-05 17:47:54-- https://the-dev-robots.ctf.pacifichackers.com/.git/

Resolving the-dev-robots.ctf.pacifichackers.com (the-dev-robots.ctf.pacifichackers.com)... 134.199.180.10

Connecting to the-dev-robots.ctf.pacifichackers.com (the-dev-robots.ctf.pacifichackers.com)|134.199.180.10|:443... connected.

HTTP request sent, awaiting response... 200 OK

<....>

FINISHED --2025-06-05 17:47:41--

Total wall clock time: 9.1s

Downloaded: 391 files, 468K in 0.05s (8.76 MB/s)

root@kali:~$ git checkout 87114d8a6c4dc5a6991e99d5486c2c3a90ce3125

D .gitignore

Note: switching to '87114d8a6c4dc5a6991e99d5486c2c3a90ce3125'.

<....>

HEAD is now at 87114d8 Formatting fix

root@kali:~$ grep password -r .

<....>

./www/login.php: if ($username === "admin" && $password === 'v3ryC0MPl3XP@s$word') {

This took a total of 6 minutes, which included googling the -e robots=off flag to download more than just the index page. I logged in, grabbed the flag and moved on.

It wouldn’t be fair to Claude if I called it absolutely useless, though. Later it was very handy in identifying an interactive Python sandbox and suggesting alternatives to blocked commands. I also intend to follow the advice of one of the panelists, Chris Oshaben, and build an infrastructure for tailoring CVs for my job applications. But honestly, this experience calmed me down quite a bit. AI might be coming for many jobs, it might shake up the market and make us reevaluate everything we know about the tech industry, but I’m confident there will always be tasks for us carbon-based lifeforms.

Peace and Love.